Edge Computing in the US: Optimize Infrastructure

Edge computing in the US is rapidly evolving, driving demand for optimized infrastructure to enhance data processing, reduce latency, and improve operational efficiency across various industries.

In an increasingly data-driven world, the ability to process information closer to its source has become not just an advantage, but a necessity. For businesses operating in the United States, optimizing edge infrastructure is key to staying competitive and innovative. Edge computing moves computation and data storage from centralized cloud environments to the network’s periphery, closer to where data is generated and consumed.

Understanding the Edge Computing Landscape in the US

The United States presents a unique and dynamic landscape for edge computing adoption. Its vast geographical expanse, coupled with a diverse industrial base ranging from manufacturing and agriculture to smart cities and healthcare, creates a fertile ground for decentralized data processing. Understanding these foundational elements is crucial for any organization looking to strategically deploy edge solutions and optimize their infrastructure.

The push towards edge computing is not merely a technological trend; it’s a direct response to the escalating volume of data generated by IoT devices, the imperative for real-time decision-making, and the growing demand for ultra-low latency applications. Think of autonomous vehicles requiring instantaneous processing of sensor data, or smart factories monitoring equipment for predictive maintenance. These scenarios underscore the critical role edge plays in enabling new capabilities and efficiencies.

Drivers of Edge Adoption

Several key factors are accelerating the adoption of edge computing across the US. One significant driver is the proliferation of IoT devices. From industrial sensors to smart home gadgets, these devices produce an unprecedented amount of data. Processing this data at the edge reduces the strain on centralized cloud resources and can significantly cut data transmission costs. Another driver is the demand for real-time insights, which a round trip to a distant data center cannot always deliver in time.

- IoT Proliferation: Millions of connected devices generate vast datasets requiring local processing.

- Latency Sensitivity: Applications like AR/VR, autonomous systems, and real-time analytics demand near-instant responses.

- Bandwidth Constraints: Moving all data to the cloud can overwhelm network bandwidth, especially in remote areas.

- Data Sovereignty and Compliance: Keeping data local can simplify regulatory adherence for sensitive information.
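To make the bandwidth point concrete, here is a minimal sketch of edge-side aggregation: an edge node collapses each window of raw readings into a small summary and forwards only the summaries. The function names, the window size, and the sample values are all assumptions chosen for illustration, not part of any particular product.

```python
from statistics import mean


def summarize_window(readings):
    """Collapse one window of raw sensor readings into a compact summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }


def summarize_stream(readings, window_size):
    """Split a reading stream into fixed-size windows and summarize each one."""
    return [
        summarize_window(readings[i:i + window_size])
        for i in range(0, len(readings), window_size)
    ]


# Hypothetical temperature samples from one sensor (illustrative values only).
raw = [21.0, 21.5, 22.0, 21.5, 30.0, 30.5, 31.0, 30.5]
summaries = summarize_stream(raw, window_size=4)

# Eight raw readings become two summary records sent upstream.
print(len(raw), "readings ->", len(summaries), "summaries")
print(summaries)
```

In practice the window size is a trade-off: longer windows save more bandwidth but delay the moment the cloud sees a change.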

The diverse regulatory environment and regional infrastructure variances within the US also play a significant role. Businesses must navigate these complexities, understanding that what works in a highly connected urban center might need adaptation for a rural utility or a remote agricultural operation. This highlights the need for flexible and scalable edge solutions that can adapt to varying network conditions and operational requirements.

Moreover, security concerns are paramount. Deploying computing power closer to endpoints introduces new vulnerabilities that must be rigorously addressed. Securing edge devices, data in transit, and applications running at the edge requires a comprehensive cybersecurity strategy. As organizations move more critical processes to the edge, the resilience and security of these distributed systems become non-negotiable.

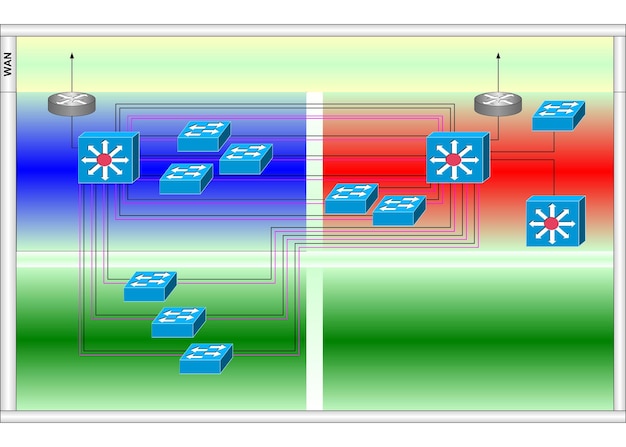

Strategy 1: Optimizing Network Architecture for Edge Deployments

The foundational element for successful edge computing is a robust and intelligent network architecture. Without a network designed to handle distributed workloads and diverse device types, the benefits of edge computing can be severely limited. Optimizing your network for edge deployments involves re-evaluating traditional centralized models and embracing a more decentralized, agile approach.

This optimization begins with understanding the specific demands of your edge applications. Are they latency-sensitive video analytics, high-bandwidth industrial IoT data streams, or mission-critical control systems? Each type of workload will have different network requirements regarding bandwidth, latency, and reliability. Tailoring the network design to these needs is crucial for effective edge operations, especially within the vast and varied US infrastructure.
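One way to reason about this is to write down each workload's requirements and check them against the links available at a site. The sketch below does that; the class names, workload names, link names, and every number in it are placeholder assumptions, so substitute measurements from your own environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    max_latency_ms: float
    min_bandwidth_mbps: float


@dataclass(frozen=True)
class Link:
    name: str
    latency_ms: float
    bandwidth_mbps: float


def suitable_links(req, links):
    """Return the names of links that meet both the latency and bandwidth needs."""
    return [
        link.name
        for link in links
        if link.latency_ms <= req.max_latency_ms
        and link.bandwidth_mbps >= req.min_bandwidth_mbps
    ]


# Placeholder numbers for illustration; measure your own links and workloads.
WORKLOADS = {
    "video_analytics": Requirement(max_latency_ms=50, min_bandwidth_mbps=100),
    "control_loop": Requirement(max_latency_ms=10, min_bandwidth_mbps=1),
}
LINKS = [
    Link("site_fiber", latency_ms=5, bandwidth_mbps=1000),
    Link("cellular_backup", latency_ms=40, bandwidth_mbps=150),
    Link("satellite", latency_ms=600, bandwidth_mbps=50),
]

for name, req in WORKLOADS.items():
    print(name, "->", suitable_links(req, LINKS))
```

A real assessment would also cover reliability (packet loss, uptime), which this sketch leaves out to stay short.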

Implementing 5G and Private Networks

The rollout of 5G infrastructure across the US is a game-changer for edge computing. 5G offers significantly lower latency, higher bandwidth, and increased connection density compared to previous generations. These characteristics make it ideal for connecting edge devices and transmitting processed data back to core systems or other edge nodes. Leveraging 5G for edge connectivity can unlock new possibilities, particularly for mobile edge computing scenarios.

- Enhanced Bandwidth: Supports high-volume data transfer from edge devices.

- Reduced Latency: Critical for real-time applications and quick decision-making.

- Increased Device Density: Connects a larger number of IoT sensors and endpoints.

- Network Slicing: Allows dedicated network resources for specific, critical edge applications.

Private 5G networks represent an even more tailored solution. By establishing a dedicated, localized 5G network within a facility, organizations gain direct control over their connectivity, including QoS (Quality of Service) and security policy. This is particularly beneficial for industrial settings, smart campuses, or large-scale logistical hubs where reliable and secure local communication is paramount for edge operations.

Beyond wireless, fiber optic networks play a critical role, especially for backhaul from edge locations to regional data centers or the cloud. Ensuring high-speed, low-latency fiber connectivity to key edge sites is essential for effective data aggregation and advanced analytics that can’t be performed solely at the device level. The thoughtful integration of wired and wireless technologies forms the backbone of an optimized edge network.

Strategy 2: Leveraging Micro-Data Centers and Modular Edge Deployments

One of the most practical ways to optimize edge infrastructure is through the strategic deployment of micro-data centers and modular solutions. Unlike traditional, large-scale data centers, these smaller, self-contained units are designed to operate closer to the data source, often in environments not traditionally suited for IT equipment. Their distributed nature is key to reducing latency and enhancing resilience.

Micro-data centers offer a complete IT environment in a compact footprint, including compute, storage, networking, power, and cooling. They can be deployed in a variety of locations, from factory floors and retail stores to remote scientific facilities. This proximity to data-generating devices minimizes the distance data must travel, allowing for real-time processing and immediate action. For US businesses spread across vast geographies, this decentralized approach is a significant advantage.

Benefits of Modular and Containerized Solutions

The adoption of modular and containerized edge solutions brings several operational and financial benefits. These pre-integrated, standardized units simplify deployment, reducing the time and cost associated with setting up edge sites. They are often ruggedized to withstand various environmental conditions, making them suitable for harsher industrial or outdoor settings. Their inherent scalability also means organizations can start small and expand their edge footprint as needed, aligning investments with actual demand.

- Rapid Deployment: Pre-configured units can be quickly installed and activated.

- Scalability: Easily add or remove modules to meet evolving computational needs.

- Environmental Resilience: Designed to operate in a wider range of temperatures and conditions.

- Cost Efficiency: Can reduce upfront capital expenditure and operating costs compared to building out a traditional data center.

Power and cooling are critical considerations for any edge deployment, especially for micro-data centers. Integrating efficient cooling systems, uninterruptible power supplies (UPS), and potentially renewable energy sources is essential for maintaining sustained operation and minimizing energy consumption. Many modern modular edge solutions come with integrated thermal management and power distribution, simplifying their deployment and management.

Furthermore, remote monitoring and management capabilities are paramount for managing a distributed fleet of micro-data centers. Given that many edge sites are unstaffed or in remote locations, the ability to monitor performance, diagnose issues, and perform maintenance remotely is crucial. This often involves integrating with centralized management platforms that provide a holistic view of the entire edge infrastructure, ensuring uptime and operational efficiency across the US landscape.

Strategy 3: Implementing Intelligent Edge Orchestration

Optimizing edge computing infrastructure goes beyond hardware and network connectivity; it extends to how these distributed resources are managed and orchestrated. Intelligent edge orchestration is the capability to deploy, manage, monitor, and update applications and infrastructure across hundreds or thousands of edge locations from a centralized platform. This strategy is vital for maintaining operational efficiency and ensuring consistent performance across a disparate edge environment.

Effective orchestration enables organizations to treat their edge infrastructure as a cohesive, programmable entity rather than a collection of isolated silos. This includes managing compute resources, allocating storage, deploying software updates, and ensuring security policies are uniformly applied. Without robust orchestration, scaling edge deployments becomes unwieldy, leading to increased operational costs and potential security vulnerabilities. In the US, where regulatory and operational requirements vary by state or region, intelligent orchestration provides valuable consistency.

Key Components of Edge Orchestration Platforms

A comprehensive edge orchestration platform typically incorporates several key functionalities. These include device lifecycle management, allowing for automated provisioning and decommissioning of edge devices. It also encompasses application deployment and management, enabling developers to push updates and new applications to edge nodes efficiently. Monitoring and analytics are also crucial, providing insights into device health, application performance, and resource utilization.

- Centralized Management: Single pane of glass for all edge devices and applications.

- Automated Deployment: Streamlines software and application rollouts.

- Real-time Monitoring: Provides insights into performance, health, and security.

- Policy Enforcement: Ensures consistent security and operational policies across the edge.
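Many orchestration tools work by comparing a desired state with what each node reports and then acting on the difference. The sketch below shows that comparison in its simplest form. The function name, node IDs, application names, and version strings are all hypothetical, and a real platform would add authentication, retries, and rollback.

```python
def plan_actions(desired, reported):
    """Compare desired and reported app versions per node and list needed actions.

    desired:  {app_name: version} that every node should run
    reported: {node_id: {app_name: version}} as last reported by each node
    """
    actions = []
    for node_id in sorted(reported):
        running = reported[node_id]
        for app, version in sorted(desired.items()):
            if app not in running:
                actions.append((node_id, "install", app, version))
            elif running[app] != version:
                actions.append((node_id, "upgrade", app, version))
        # Anything running that is not in the desired state gets removed.
        for app in sorted(set(running) - set(desired)):
            actions.append((node_id, "remove", app, running[app]))
    return actions


# Hypothetical fleet state for illustration.
desired = {"inference": "1.4.0", "telemetry": "2.1.0"}
reported = {
    "store-001": {"inference": "1.4.0", "telemetry": "2.1.0"},
    "store-002": {"inference": "1.3.2"},
    "store-003": {"inference": "1.4.0", "telemetry": "2.1.0", "legacy-agent": "0.9.0"},
}

for action in plan_actions(desired, reported):
    print(action)
```

Because the plan is computed from state rather than from a history of commands, running it twice against an already compliant fleet produces no actions.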

Security automation is another critical aspect of intelligent orchestration. With a growing number of edge devices, manually patching vulnerabilities or updating security configurations is not feasible. Orchestration platforms can automate these tasks, ensuring that all edge endpoints are protected against emerging threats and comply with enterprise security standards. This proactive approach to security is indispensable for safeguarding sensitive data and operations.

Finally, intelligent orchestration platforms often leverage AI and machine learning for predictive maintenance and optimized resource allocation. By analyzing performance data from edge devices, these platforms can anticipate potential hardware failures or network bottlenecks, scheduling maintenance before issues impact operations. They can also dynamically allocate compute and storage resources based on real-time demand, helping critical applications get the resources they need.
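As a deliberately simple stand-in for the predictive models such platforms use, the sketch below fits a straight line to recent readings and estimates how long until the trend reaches an upper limit. It assumes evenly spaced samples and a roughly linear trend; the function names, readings, and threshold are illustrative assumptions, and production systems typically use richer models.

```python
def fit_line(ys):
    """Least-squares slope and intercept for samples taken at x = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two samples")
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def samples_until(ys, upper_limit):
    """Estimate how many more samples until the trend reaches upper_limit.

    Returns None when the trend is flat or falling, and 0.0 when the
    fitted line is already at or above the limit.
    """
    slope, intercept = fit_line(ys)
    if slope <= 0:
        return None
    crossing = (upper_limit - intercept) / slope
    return max(0.0, crossing - (len(ys) - 1))


# Hypothetical hourly temperature readings (deg C) from one edge node.
temps = [60, 62, 64, 66, 68]
print("hours until 80 C:", samples_until(temps, upper_limit=80))
```

A scheduler could use such an estimate to book a maintenance visit before the limit is reached rather than after an alarm fires.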

Addressing Security and Compliance at the Edge

As organizations extend their computing capabilities to the network edge, the attack surface expands dramatically, presenting new security and compliance challenges. Each edge device, whether a sensor, gateway, or micro-data center, becomes a potential entry point for malicious actors. Therefore, integrating robust security measures and ensuring regulatory compliance must be a core consideration from the initial planning stages of edge deployments, especially within the diverse legal landscape of the US.

A multi-layered security approach is essential. This begins with securing the physical edge devices themselves, ensuring they are tamper-resistant and that only authorized personnel have access. Beyond physical security, logical controls such as strong authentication mechanisms, encryption for data at rest and in transit, and strict access controls are paramount. The distributed nature of edge deployments requires a shift from traditional perimeter-based security models to a zero-trust architecture, where every device and user must be verified before granting access.

Key Security Practices for Edge and Compliance

Implementing a comprehensive security strategy for edge computing involves several key practices. Regular patching and software updates are crucial for addressing known vulnerabilities in edge device operating systems and applications. This can be challenging for remote or numerous edge devices, highlighting the need for automated update mechanisms, often facilitated by intelligent orchestration platforms. Continuous monitoring for anomalies and suspicious activities is also vital for early threat detection.

- Zero-Trust Architecture: Verify every access request, regardless of origin.

- Data Encryption: Protect data at rest and in transit between edge and cloud.

- Automated Patching: Ensure regular updates for all edge software and firmware.

- Endpoint Protection: Deploy anti-malware and intrusion detection on edge devices.
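The zero-trust idea can be illustrated with a small request check: a message is accepted only if it comes from a known device, is recent, and carries a valid signature, regardless of which network it arrived on. This is a sketch using Python's standard `hmac` and `hashlib` modules. The message layout, the device ID, the 60-second freshness window, and the demo key are assumptions, and real deployments would usually rely on per-device certificates and a managed key store.

```python
import hashlib
import hmac


def sign(key: bytes, device_id: str, timestamp: int, payload: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the device id, timestamp, and payload."""
    message = device_id.encode() + b"|" + str(timestamp).encode() + b"|" + payload
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(keys, device_id, timestamp, payload, tag, now, max_age_s=60):
    """Accept a request only if the device is known, the message is fresh,
    and the tag matches. Network location is never taken as proof."""
    key = keys.get(device_id)
    if key is None:
        return False  # unknown device
    if abs(now - timestamp) > max_age_s:
        return False  # stale or future-dated message (limits replay)
    expected = sign(key, device_id, timestamp, payload)
    return hmac.compare_digest(expected, tag)  # constant-time comparison


# Demo values only; never hard-code real keys.
keys = {"gw-17": b"demo-key-not-for-production"}
ts = 1_700_000_000
tag = sign(keys["gw-17"], "gw-17", ts, b'{"temp": 21.5}')

print(verify(keys, "gw-17", ts, b'{"temp": 21.5}', tag, now=ts + 5))
print(verify(keys, "gw-17", ts, b'{"temp": 99.9}', tag, now=ts + 5))
```

The first call passes and the second fails because the payload no longer matches the signature.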

Compliance with industry-specific regulations (e.g., HIPAA for healthcare, NERC-CIP for critical infrastructure) and general data privacy laws (e.g., CCPA for California) adds another layer of complexity for US-based edge deployments. Edge solutions must be designed to store and process data in a manner that aligns with these legal requirements, which may involve data localization, specific data retention policies, and stringent auditing capabilities. This often means processing sensitive data directly at the edge to avoid transmitting it across less secure networks or storing it in distant cloud data centers that might not comply with local regulations.

Furthermore, incident response planning for edge environments requires careful consideration. In the event of a security breach or operational failure at an edge node, organizations must have clear protocols for detection, containment, eradication, and recovery. This includes capabilities for remote diagnostics and remediation, as well as offline operation modes for critical edge applications to maintain business continuity even when connectivity is compromised. Proactive risk assessment and continuous security audits are vital components of a resilient edge strategy.
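One common pattern for offline operation is store-and-forward: the edge node queues outgoing messages while the uplink is down and delivers them in order once it returns. The sketch below is a minimal in-memory version. The class name, the `send` callback contract, and the capacity are assumptions, and a production buffer would normally persist to disk so a power cycle does not lose data.

```python
from collections import deque


class StoreAndForward:
    """Buffer outgoing messages while the uplink is down, then flush in order."""

    def __init__(self, send, capacity=1000):
        self._send = send  # callable that returns True when delivery succeeds
        self._pending = deque(maxlen=capacity)  # oldest entries drop when full

    def publish(self, message):
        """Queue the message, then try to drain the queue."""
        self._pending.append(message)
        self.flush()

    def flush(self):
        """Send queued messages oldest-first; stop at the first failure."""
        while self._pending:
            if not self._send(self._pending[0]):
                return False
            self._pending.popleft()
        return True

    def __len__(self):
        return len(self._pending)


# Simulated uplink for the demo: a flag we can flip between down and up.
uplink = {"up": False}
delivered = []


def fake_send(message):
    if uplink["up"]:
        delivered.append(message)
    return uplink["up"]


buffer = StoreAndForward(fake_send, capacity=3)
for reading in ("r1", "r2", "r3", "r4"):
    buffer.publish(reading)  # uplink is down, so these accumulate

print("queued while offline:", len(buffer))
uplink["up"] = True
buffer.flush()
print("delivered after reconnect:", delivered)
```

With a capacity of three, the oldest reading is dropped when the fourth arrives. Whether to drop oldest or newest data under pressure is a design choice that depends on the application.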

Future Trends and Best Practices in US Edge Computing

The landscape of edge computing in the US is continuously evolving, driven by advancements in technology and changing business needs. Staying abreast of emerging trends and adopting best practices is essential for organizations to maximize their edge investments and maintain a competitive edge. The future of edge computing is likely to be characterized by greater integration with AI, increased automation, and more sustainable deployment models.

One significant trend is the convergence of AI and edge computing, often referred to as “AI at the Edge.” Deploying AI/ML models directly on edge devices enables real-time inferencing without requiring constant connectivity to the cloud. This is particularly impactful for applications like computer vision in manufacturing, predictive analytics in smart cities, and natural language processing on personal devices. The reduced latency and enhanced privacy offered by edge AI are significant drivers of innovation.

Adopting Sustainable Edge Practices

As edge deployments proliferate, their environmental impact becomes a growing concern. Adopting sustainable practices for edge infrastructure is not just responsible but can also lead to cost savings. This includes optimizing power consumption for edge devices and micro-data centers, exploring renewable energy sources for remote deployments, and considering the full lifecycle impact of hardware, from manufacturing to disposal. For US corporations, this is becoming an increasingly important consideration.

- Energy Efficiency: Utilize low-power components and efficient cooling solutions.

- Renewable Energy Integration: Power remote edge sites with solar or wind.

- Hardware Lifecycle Management: Focus on repair, reuse, and responsible recycling.

- Circular Economy Principles: Design edge solutions for minimal waste and maximum resource utilization.

Another emerging best practice is the adoption of open standards and interoperability. As edge ecosystems become more complex, relying on proprietary solutions can lead to vendor lock-in and limit flexibility. Embracing open-source software, standardized APIs, and interoperable hardware components promotes a more agile and future-proof edge infrastructure. This allows organizations to mix and match solutions from different providers, optimizing for cost, performance, and specific application requirements.

Finally, the human element remains crucial. While automation and intelligent orchestration reduce the need for on-site personnel, developing specialized skills in edge architecture, security, and application development is paramount. Investing in training and fostering cross-functional teams that can bridge IT, OT (Operational Technology), and business departments will be key to successfully implementing and managing complex edge solutions. The focus will shift from managing individual devices to orchestrating entire distributed systems for maximal strategic gain.

Measuring Success and Scaling Edge Initiatives

Successfully deploying edge computing infrastructure is only half the battle; the other half lies in effectively measuring its impact and strategically scaling initiatives based on demonstrable value. Defining clear key performance indicators (KPIs) and establishing robust monitoring frameworks are crucial for understanding the return on investment (ROI) and identifying areas for further optimization. For businesses in the United States, proving the tangible benefits of edge is essential for securing continued investment and broader adoption.

Metrics for success can vary widely depending on the specific edge use case. For a manufacturing plant, KPIs might include reduced downtime due to predictive maintenance, increased production throughput, or lower energy consumption. For a retail chain, it could be faster customer checkout times or enhanced personalized shopping experiences. The key is to align edge project goals with broader business objectives and track their contribution to those outcomes.

Scaling Edge Deployments Responsibly

Once initial edge deployments demonstrate value, the challenge shifts to scaling these initiatives across more locations or for additional applications. Responsible scaling involves a phased approach, learning from early deployments, and incrementally expanding. It also necessitates a clear understanding of the total cost of ownership (TCO) for edge infrastructure, including hardware, software, connectivity, and ongoing operational expenses. This financial oversight is critical for sustainable growth.

- Pilot Programs: Start with small, controlled deployments to validate concepts.

- Iterative Expansion: Gradually scale based on lessons learned and proven value.

- Cost-Benefit Analysis: Continuously evaluate ROI and TCO for each expansion phase.

- Standardization: Implement common architectures and processes for repeatable deployments.
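The cost-benefit evaluation for each phase can start from three simple figures: TCO over the evaluation period, ROI against that TCO, and the payback period. The sketch below uses common textbook definitions of these. The function names and all dollar amounts are hypothetical, and a fuller model would also account for discounting, hardware refresh, and connectivity price changes.

```python
def total_cost_of_ownership(capex, annual_opex, years):
    """Upfront spend plus recurring spend over the evaluation period."""
    return capex + annual_opex * years


def return_on_investment(annual_benefit, capex, annual_opex, years):
    """Net gain over the period divided by TCO (0.25 means a 25% return)."""
    tco = total_cost_of_ownership(capex, annual_opex, years)
    return (annual_benefit * years - tco) / tco


def payback_years(annual_benefit, capex, annual_opex):
    """Years until cumulative net benefit covers capex; None if it never does."""
    net_per_year = annual_benefit - annual_opex
    if net_per_year <= 0:
        return None
    return capex / net_per_year


# Hypothetical figures for a single pilot site, in dollars.
pilot = {"capex": 120_000, "annual_opex": 30_000}
tco = total_cost_of_ownership(**pilot, years=3)
roi = return_on_investment(annual_benefit=100_000, **pilot, years=3)
payback = payback_years(annual_benefit=100_000, **pilot)

print(f"TCO ${tco:,}  ROI {roi:.1%}  payback {payback:.1f} years")
```

Re-running the same calculation with actual figures after each expansion phase gives a consistent basis for deciding whether to continue.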

Automation plays a pivotal role in scaling edge deployments efficiently. From automated provisioning of new edge devices to continuous integration/continuous delivery (CI/CD) pipelines for edge applications, minimizing manual intervention reduces errors and accelerates deployment cycles. This level of automation ensures consistency across a growing fleet of edge nodes, which can be geographically dispersed throughout the US, minimizing the need for extensive on-site IT support.
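A deployment pipeline for a large fleet often releases in waves: a small canary group first, then progressively larger groups, halting if anything fails. The sketch below shows one way to plan and run such waves. The function names, wave sizes, node names, and the `deploy` callback are assumptions for illustration, not the behavior of any specific CI/CD tool.

```python
def plan_waves(nodes, canary_size=1, growth=2):
    """Split nodes into rollout waves that start small and grow geometrically."""
    if canary_size < 1 or growth < 1:
        raise ValueError("canary_size and growth must be at least 1")
    waves, start, size = [], 0, canary_size
    while start < len(nodes):
        waves.append(nodes[start:start + size])
        start += size
        size *= growth
    return waves


def rollout(nodes, deploy, canary_size=1, growth=2):
    """Deploy wave by wave; halt after the first wave with any failure.

    Returns (nodes updated successfully, whether the rollout completed).
    """
    done = []
    for wave in plan_waves(nodes, canary_size, growth):
        results = [deploy(node) for node in wave]
        done.extend(node for node, ok in zip(wave, results) if ok)
        if not all(results):
            return done, False
    return done, True


# Hypothetical fleet of seven nodes; node "n2" simulates a failed update.
fleet = [f"n{i}" for i in range(7)]
print(plan_waves(fleet))
print(rollout(fleet, deploy=lambda node: node != "n2"))
```

Halting at the first failed wave limits how many sites a bad release can reach, at the cost of a slower full rollout.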

Furthermore, building a strong ecosystem of partners, including hardware providers, software vendors, and system integrators, can significantly ease the burden of scaling. Collaborating with trusted partners who have expertise in various aspects of edge technology allows organizations to leverage external capabilities and accelerate their edge journey. The result is that complex challenges become manageable, scalable solutions that drive tangible business results.

| Key Optimization | Brief Description |
|---|---|
| 🚀 Network Architecture | Redesigning networks with 5G/private networks for lower latency and higher bandwidth vital for edge data. |
| 📦 Modular Deployments | Utilizing micro-data centers for rapid, scalable, and resilient deployment closer to data sources. |
| 🧠 Intelligent Orchestration | Centralized management and automation for deploying, monitoring, and updating distributed edge resources efficiently. |
| 🔒 Security & Compliance | Implementing robust security measures and ensuring regulatory adherence across all edge devices and data. |

Frequently Asked Questions About Edge Computing in the US

What is edge computing?

Edge computing involves processing data closer to its source, rather than sending it all to a centralized cloud or data center. This proximity minimizes latency, conserves bandwidth, and enables real-time decision-making for applications like IoT devices and autonomous systems. It shifts the computational load to the network’s periphery.

Why is edge computing important for US businesses?

For US businesses, edge computing is crucial due to the vast geographical spread and diverse industrial needs. It addresses latency issues for critical applications, reduces data transmission costs, enhances data security by keeping sensitive information local, and ensures compliance with regional data regulations, fostering innovation and efficiency.

How does 5G affect edge computing?

5G significantly boosts edge computing by providing ultra-low latency, higher bandwidth, and increased connection density. This enables faster data transfer between edge devices and localized processing units, supporting real-time applications like autonomous vehicles and industrial automation, and expanding the reach of edge deployments across varied US environments.

What are micro-data centers?

Micro-data centers are compact, self-contained IT infrastructures that include compute, storage, networking, power, and cooling. Designed for deployment at the network edge, they bring computation closer to data sources, improving efficiency, reducing latency, and simplifying localized IT management in diverse environments from factories to remote offices.

What is edge orchestration, and why is it necessary?

Edge orchestration centrally manages, monitors, and deploys applications and resources across distributed edge infrastructure. It’s necessary for scalable edge solutions as it automates tasks, ensures consistent security policies, and provides a unified view of disparate edge nodes, preventing operational complexities and enabling efficient scaling for numerous localized deployments.

Conclusion

Optimizing edge computing infrastructure in the US is not a one-time task but a continuous journey of strategic planning, technological adoption, and vigilant management. By focusing on network architecture, embracing modular deployments, and implementing intelligent orchestration, organizations can build a resilient, efficient, and future-proof edge environment. These strategies, coupled with rigorous security protocols and a keen eye on emerging trends, pave the way for businesses to fully harness the transformative power of edge computing, driving innovation, enhancing operational efficiency, and securing a competitive advantage in the digital age.